A practical guide for digital safety officers, journalists, and high-stakes decision-makers

1. Introduction

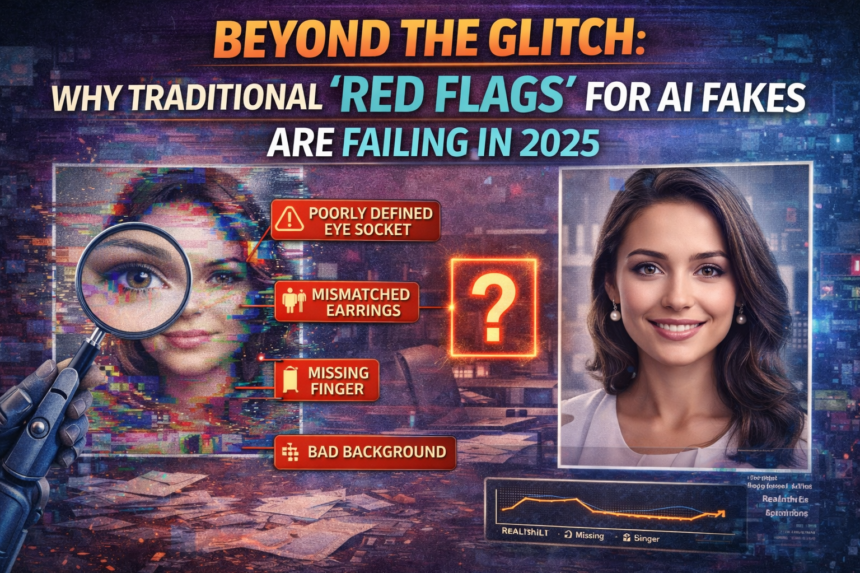

The era of “spotting the six-fingered hand” is officially over. As we move through 2025, generative models like Sora 2 and Veo 3 have refined the physical geometry of synthetic media to the point where casual visual inspection is no longer a reliable defense. What was once a technical curiosity has become a sophisticated tool for financial fraud, with AI-enabled fraud losses projected to approach $40 billion by 2027.


Most discussions overlook the fact that AI “fakes” are no longer just static images or clunky videos; they are now integrated into multi-channel social engineering attacks. What is rarely addressed is the “Liar’s Dividend”: the growing tendency of real individuals to dismiss authentic, incriminating evidence by simply claiming it was “AI-generated.”

This matters because the inability to verify reality doesn’t just enable scams; it erodes the foundational trust that legal systems and corporate governance need in order to function.

This article offers an editorial breakdown of the shift from artifact-based detection to provenance-based verification. We will move past the basic “look for glitches” advice and explore the technical and behavioral frameworks needed to navigate a world where “seeing is no longer believing.”

2. Context and Background

To understand the current state of synthetic media, we must distinguish between two types of “fakes”: cheapfakes (content manipulated with basic software like Photoshop) and deepfakes (content generated or altered with deep learning models, such as generative adversarial networks (GANs) and diffusion models).

The Evolution of Synthetic Deception

In 2023, the primary threat was “non-consensual imagery.” By early 2025, the threat landscape shifted toward Synthetic Identity Fraud. According to the Pindrop 2025 report, voice cloning incidents alone surged by over 400% in the insurance sector as attackers realized that mimicking a human voice requires as little as three seconds of high-quality audio.

The “Detection Gap”

The “Detection Gap” refers to the time between the release of a new generative model and the update of commercial detection software. For months at a time, high-end synthetic media can bypass standard enterprise filters because the “digital fingerprints” of the new model haven’t been mapped yet.

The Analogy of the Counterfeit Bill

In the 1990s, you could spot a fake $100 bill by its feel or a blurry portrait. Today, professional counterfeiters can closely match the paper and ink the Treasury uses. You no longer judge the paper; you look for the hidden security thread and how it reacts under ultraviolet light. Detecting AI fakes has followed the same path: we must now look for the “invisible” security layers rather than the surface image.

3. What Most Articles Get Wrong

Standard “how-to” guides on spotting AI are often dangerously outdated, providing a false sense of security.

- Misconception 1: “Look for the blinking.” Early deepfakes struggled with involuntary movements like eye blinking. Modern models have mastered these biological rhythms. Relying on blinking as a primary indicator in 2025 is like judging a car’s safety by checking whether the headlights turn on: it’s a baseline, not a guarantee.

- Misconception 2: “AI-generated text is always polished.” While early LLMs were overly formal, the current generation can be prompted to include typos, slang, and “spiky” sentence structures that mimic human error. Detection tools that look for “too-perfect grammar” are therefore increasingly producing false negatives.

- Misconception 3: “Reverse image search is a silver bullet.” Many believe that if an image doesn’t show up in a reverse search, it must be original. However, generative AI creates entirely new pixel arrays. A reverse search can trace a fake back to its source only when AI was used to edit an existing photo; against text-to-image generation, where no “original” exists, there is nothing for it to find.
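Why reverse search struggles with fully generated images becomes clearer with a toy version of near-duplicate matching. This is an illustrative sketch, not how any particular search engine works: it assumes images have already been reduced to 8×8 grayscale grids and uses a simple “average hash” (one bit per pixel, set when the pixel is brighter than the mean). The names `average_hash`, `hamming`, and `lookup`, and the 10-bit match threshold, are hypothetical. A lightly edited photo stays close to its original’s hash, while a newly generated image has no near neighbor in the index.

```python
"""Toy near-duplicate lookup in the spirit of reverse image search.
Assumes images are pre-reduced to 8x8 grayscale grids; real engines use
far richer features. All names and thresholds are illustrative."""
from typing import Dict, List

Grid = List[List[int]]

def average_hash(grid: Grid) -> int:
    """64-bit hash: bit is 1 where a pixel is brighter than the grid mean."""
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

def lookup(query: Grid, index: Dict[str, int], max_distance: int = 10) -> List[str]:
    """Names of indexed images whose hash is within max_distance bits."""
    q = average_hash(query)
    return [name for name, h in index.items() if hamming(q, h) <= max_distance]

# A smooth gradient stands in for a real photo already in the index.
original = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
index = {"original_photo": average_hash(original)}

# AI-edited copy: global brightening plus a small pasted patch.
edited = [[min(255, p + 20) for p in row] for row in original]
for r in range(2):
    for c in range(2):
        edited[r][c] = 255

# Fully generated image with no counterpart anywhere in the index.
generated = [[255 if (r + c) % 2 else 0 for c in range(8)] for r in range(8)]

print(lookup(edited, index))     # ['original_photo']
print(lookup(generated, index))  # []
```

The edited copy differs from the original by only a handful of hash bits, so the search still finds its source; the generated image differs by half its bits and matches nothing.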

4. Deep Analysis and Insight

The real battle for authenticity in 2025 is being fought at the level of metadata and provenance. As visual artifacts disappear, we must focus on the “digital trail” and the “contextual logic” of the media.

The Collapse of Visual Intuition

Claim: “Laundering” synthetic media through re-capture and compression is defeating both human and AI detectors. Explanation: Attackers often take an AI-generated video and “launder” it by recording the screen with a physical camera or applying heavy compression filters. Consequence: This process strips away the digital artifacts (such as high-frequency noise patterns) that AI detectors rely on. In 2025, low-quality, grainy video is actually more dangerous than high-definition video because it hides the technical flaws of the AI.
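To see why laundering helps attackers, consider a one-row toy model. The snippet below is a sketch under simplifying assumptions: a moving-average blur stands in for screen re-capture or heavy compression, a faint alternating pattern stands in for a generator’s high-frequency “fingerprint,” and the mean absolute second difference serves as a crude high-frequency measure. Real codecs and detectors are far more complex, and the function names are hypothetical.

```python
"""Toy demo: a low-pass step (stand-in for re-capture or heavy compression)
attenuates high-frequency detail that artifact-based detectors may use.
One pixel row only; not a real codec or detector."""
from typing import List

def box_blur(row: List[float], width: int = 3) -> List[float]:
    """Moving average over `width` samples (valid region only)."""
    return [sum(row[i:i + width]) / width for i in range(len(row) - width + 1)]

def hf_energy(row: List[float]) -> float:
    """Mean absolute second difference: a crude high-frequency measure."""
    diffs = [abs(row[i - 1] - 2 * row[i] + row[i + 1]) for i in range(1, len(row) - 1)]
    return sum(diffs) / len(diffs)

# Hypothetical row: a smooth ramp plus a faint alternating "fingerprint".
row = [float(i) + 3.0 * (-1) ** i for i in range(64)]
laundered = box_blur(row)

print(f"original HF energy:  {hf_energy(row):.2f}")        # 12.00
print(f"laundered HF energy: {hf_energy(laundered):.2f}")  # 4.00
```

In this toy case the blur cuts the high-frequency measure to a third; a detector keyed on that pattern has correspondingly less signal to work with.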

Behavioral “Stress-Testing” for Real-Time Fakes

Claim: Passive observation is no longer sufficient; active “challenges” are required for live verification. Explanation: During a deepfake video call, many real-time face-swap tools map the face in 2D or 2.5D rather than as a full 3D model. Consequence: Asking the person on the other end to make a lateral 90-degree head turn, or to pass a hand in front of their face, can cause the “mask” to glitch, because these tools often cannot handle a rapid change in perspective and hand occlusion simultaneously in real time.
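A live challenge works best when it is unpredictable: if the prompts are fixed, an attacker can rehearse or pre-render them. The sketch below assumes a hypothetical prompt list built from the two challenges described above and picks distinct prompts with Python’s `secrets` module, so they cannot be guessed in advance. The function name `pick_challenges` is illustrative.

```python
"""Sketch: unpredictable liveness prompts for a suspicious video call.
The prompt list and function name are hypothetical."""
import secrets
from typing import List

CHALLENGES = [
    "Turn your head slowly 90 degrees to the left, then back",
    "Turn your head slowly 90 degrees to the right, then back",
    "Pass an open hand slowly in front of your face",
    "Turn your head while passing a hand in front of your face",
]

def pick_challenges(n: int = 2) -> List[str]:
    """Pick n distinct prompts using the OS's cryptographically secure RNG."""
    if not 0 < n <= len(CHALLENGES):
        raise ValueError("n must be between 1 and len(CHALLENGES)")
    return secrets.SystemRandom().sample(CHALLENGES, n)

for prompt in pick_challenges():
    print("-", prompt)
```

Combining a turn with an occlusion targets both weaknesses at once; a genuine participant can comply in seconds, while a struggling face-swap may visibly break.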

The Rise of Watermarking (SynthID and C2PA)

Claim: Verification is moving from “What does it look like?” to “Where did it come from?” Explanation: Organizations are adopting the C2PA (Coalition for Content Provenance and Authenticity) standard, which attaches a cryptographically signed “nutrition label” (Content Credentials) to a file and tracks its history from the camera sensor to the final edit. Watermarking schemes such as Google DeepMind’s SynthID complement this by embedding an imperceptible signal directly into AI-generated content. Consequence: In the near future, the burden of proof will shift. Media without a verifiable provenance chain will be treated as “guilty until proven innocent” in professional and legal settings.
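The core idea behind a signed provenance chain, where each step binds a hash of the content to the record before it, can be sketched in a few lines. This is a toy, not the C2PA format: real C2PA manifests are embedded in the file and signed with certificate-based public-key signatures, whereas this sketch substitutes an HMAC with a hypothetical shared key (`SIGNING_KEY`) to stay self-contained. The record fields and function names are likewise illustrative.

```python
"""Toy provenance chain in the spirit of C2PA Content Credentials.
NOT the C2PA format: an HMAC with a hypothetical shared key stands in
for real certificate-based signatures."""
import hashlib
import hmac
import json
from typing import Dict, List

SIGNING_KEY = b"hypothetical-device-key"  # assumption: stand-in for a private key

def _sign(payload: Dict) -> str:
    data = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, data, hashlib.sha256).hexdigest()

def add_step(chain: List[Dict], action: str, content: bytes) -> None:
    """Append a signed record linking this content to the previous record."""
    payload = {
        "action": action,
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "prev_signature": chain[-1]["signature"] if chain else None,
    }
    chain.append({**payload, "signature": _sign(payload)})

def verify(chain: List[Dict], final_content: bytes) -> bool:
    """Check every signature and link, then the final content hash."""
    prev = None
    for record in chain:
        payload = {k: v for k, v in record.items() if k != "signature"}
        if payload["prev_signature"] != prev:
            return False
        if not hmac.compare_digest(_sign(payload), record["signature"]):
            return False
        prev = record["signature"]
    return bool(chain) and chain[-1]["content_sha256"] == hashlib.sha256(final_content).hexdigest()

chain: List[Dict] = []
add_step(chain, "captured", b"raw sensor bytes")
add_step(chain, "cropped", b"cropped bytes")
print(verify(chain, b"cropped bytes"))  # True
print(verify(chain, b"swapped bytes"))  # False: content no longer matches
```

Because each record carries the previous record’s signature, altering any earlier step (for example, relabeling a generated image as captured) breaks verification, and so does a file whose bytes no longer match the final hash.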

5. Practical Implications and Real-World Scenarios

Scenario 1: The Corporate Wire Transfer

A mid-level manager receives a video call from the “CEO” requesting an urgent, off-cycle payment. The voice and face are perfect.

- Action: The manager should use out-of-band verification: hang up and call the CEO back on a known, pre-verified phone number (never one supplied during the call), or ask a “secret question” whose answer isn’t available in the CEO’s public speeches or social media.
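The out-of-band rule can also be written down as an explicit policy check, which makes it harder to skip under time pressure. This sketch uses hypothetical names and values (`PaymentRequest`, `VERIFIED_DIRECTORY`, the 10,000 threshold): an off-cycle or large request is released only after a callback to a number registered in advance, or a correct answer to a pre-agreed secret question.

```python
"""Sketch of an out-of-band release rule for payment requests.
All names, numbers, and the threshold are hypothetical."""
from dataclasses import dataclass
from typing import Optional

# Assumption: contact numbers registered in advance through a trusted process.
VERIFIED_DIRECTORY = {"ceo": "+1-555-0100"}

@dataclass
class PaymentRequest:
    requester: str   # who the request claims to come from
    amount: float
    off_cycle: bool  # outside the normal payment schedule

def may_release(req: PaymentRequest,
                callback_number: Optional[str] = None,
                secret_answer_ok: bool = False,
                threshold: float = 10_000) -> bool:
    """Allow routine payments; demand independent confirmation otherwise."""
    if req.amount < threshold and not req.off_cycle:
        return True
    known = VERIFIED_DIRECTORY.get(req.requester)
    # Only a callback to the pre-registered number counts, never one given in the call.
    called_back = known is not None and callback_number == known
    return called_back or secret_answer_ok

urgent = PaymentRequest("ceo", 250_000, off_cycle=True)
print(may_release(urgent))                                 # False
print(may_release(urgent, callback_number="+1-555-0100"))  # True
```

The key design choice is that the callback number comes from the directory, never from the request itself; a number offered during a suspicious call proves nothing.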

Scenario 2: The Political News Cycle

A video surfaces of a candidate making an inflammatory statement 48 hours before an election.

- Action: Check for “Corroboration Latency”: how quickly independent confirmation appears. If a major event really happened, were there other cameras, witnesses, or outlets? If a video has only one angle and no reputable news outlet was present, its “Source Trust” is effectively zero, regardless of how realistic the video looks.
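That heuristic can be sketched as a simple rule over the sightings collected for an event. Everything here is an illustrative assumption: the `Sighting` fields, the six-hour window, and the three labels. One angle with no reputable outlet present yields “zero trust,” however realistic the clip looks.

```python
"""Hypothetical corroboration rule for a viral clip. Field names, the
six-hour window, and the labels are illustrative assumptions."""
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

@dataclass
class Sighting:
    source: str         # outlet or uploader name
    is_reputable: bool  # e.g. an established news organization
    camera_angle: str   # crude label for a distinct vantage point
    seen_at: datetime   # when this footage or report appeared

def corroboration_status(event_time: datetime, sightings: List[Sighting],
                         window: timedelta = timedelta(hours=6)) -> str:
    """Classify an event by independent angles and reputable presence."""
    recent = [s for s in sightings if abs(s.seen_at - event_time) <= window]
    angles = {s.camera_angle for s in recent}
    has_reputable = any(s.is_reputable for s in recent)
    if len(angles) >= 2 and has_reputable:
        return "corroborated"
    if len(angles) <= 1 and not has_reputable:
        return "zero trust"  # one angle, no reputable outlet: do not rely on it
    return "partial"         # keep verifying before acting on it

t0 = datetime(2024, 11, 3, 12, 0)
clip = [Sighting("anonymous_upload", False, "single-phone", t0)]
print(corroboration_status(t0, clip))  # zero trust
```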

Who is at Risk?

- Wealthy Individuals & Executives: Their high volume of public audio/video data makes them easy to “clone.”

- Elderly Populations: They are the primary targets for “Family Emergency” voice-cloning scams.

6. Limitations, Risks, or Counterpoints

A major risk of the “spot the fake” movement is the Universal Skepticism Trap. If we teach the public that everything could be fake, they may stop believing in anything. This benefits bad actors who can commit real crimes on camera and then use the “AI defense” to escape accountability.

Additionally, “AI detectors” are themselves fallible. Current text detectors show high false-positive rates for non-native English speakers, whose more formulaic writing (simpler vocabulary, more predictable phrasing) can resemble the patterns detectors associate with AI. Over-reliance on these tools can lead to unfair accusations in academic or professional settings.
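A short base-rate calculation shows how quickly false positives turn into unfair accusations. The numbers below are purely illustrative assumptions, not measured detector rates: 1,000 documents, 10% of them AI-written, a detector that catches 90% of AI text, and false-positive rates of 10% versus 30% for two hypothetical groups of human writers.

```python
"""Base-rate sketch: what share of flagged documents are human-written?
All rates below are hypothetical, chosen only for illustration."""

def flagged_breakdown(n_docs: int, ai_share: float,
                      true_positive_rate: float, false_positive_rate: float):
    """Return (correct flags, false flags, share of flags that are innocent)."""
    ai_docs = n_docs * ai_share
    human_docs = n_docs - ai_docs
    true_flags = ai_docs * true_positive_rate
    false_flags = human_docs * false_positive_rate
    return true_flags, false_flags, false_flags / (true_flags + false_flags)

for label, fpr in [("group A", 0.10), ("group B", 0.30)]:
    hits, false_hits, innocent = flagged_breakdown(1000, 0.10, 0.90, fpr)
    print(f"{label}: {hits + false_hits:.0f} flagged, "
          f"{false_hits:.0f} human-written ({innocent:.0%})")
# group A: 180 flagged, 90 human-written (50%)
# group B: 360 flagged, 270 human-written (75%)
```

Even in the milder case, half of the flagged documents were written by people; when one group of writers faces a higher false-positive rate, three quarters of its flags are wrong.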

7. Forward-Looking Perspective

By 2027, we expect an “Authenticity Economy” to emerge, with verification moving from a software tool to a hardware feature. Smartphones will likely ship with “Hardware-Rooted Trust,” where the camera hardware itself signs every photo with a device-unique key at capture, making it far harder to pass an AI image off as a “live” photo.

We also anticipate the rise of Personal Voice Passkeys. Much like a PIN, individuals will agree on private phrases that never appear in their public recordings; because an AI clone trained on public audio cannot know them in advance, they provide a secondary layer of “Biometric Truth” in a synthetic world.

8. Key Takeaways

- Move Beyond Visuals: Do not trust your eyes. Use technical tools like SynthID or check for C2PA metadata.

- Live Challenge Tactics: During suspicious video calls, ask the person to turn their head sideways or pass a hand in front of their face.

- Verify the Source, Not the Content: Focus on who shared it and where it originated. If the provenance is broken, the content is suspect.

- Establish “Out-of-Band” Protocols: For high-stakes actions (like wire transfers), always use a second, independent communication channel.

9. Editorial Conclusion

In the coming years, “digital literacy” will undergo its most significant transformation since the invention of the internet. We are moving from a world of “Information Abundance” to one of “Authenticity Scarcity.” At Neuroxa, we believe the solution isn’t to build better “glitch detectors,” but to rebuild our systems of trust.

The ability to spot a fake is no longer a niche technical skill. It is a fundamental requirement for participating in modern society. As the line between the organic and the synthetic continues to blur, our greatest defense is not an algorithm, but our ability to maintain a rigorous, skeptical, and source-verified approach to everything we consume.